Whether to use generative AI in B2B GTM strategies or operations is no longer a question. The question is how to best use this technology.

The biggest hindrances to using generative AI and large language models (LLMs) are bias and hallucination. These models tend to ‘fill in’ missing information with content that is sometimes inaccurate.

In this article, we’ll discuss how to tackle both challenges and prevent your LLMs from lying to you.

What Are Some Popular LLMs in 2025?

OpenAI launched ChatGPT on November 30, 2022, and it forever changed the world of AI.

The race to create Large Language Models (LLMs) capable of generating human-quality text, translating languages, and writing creative content intensified. Beyond text, models emerged that can generate images and videos from prompts!

Some of the most prominent public LLMs include:

- GPT-3.5 series (OpenAI): One of the most widely used LLMs, GPT-3.5 is strong at creative text generation and handles a range of writing styles, from poems to code.

- Jurassic-1 Jumbo (AI21 Labs): This model offers strong language understanding and question-answering abilities, making it a good choice for research tasks.

- BLOOM (BigScience): An open-source, multilingual LLM that works across dozens of languages. It's a good option for users seeking a transparent and cost-effective solution.

- PaLM 2 models (Google AI): Announced by Google in May 2023, these models are designed for complex reasoning, multilingual, and coding tasks.

Cost Associated with LLMs

For all their promise, LLMs can be frustrating to adopt. To begin with, public LLMs either charge subscription fees or bill on a pay-per-use basis.

These costs can quickly add up, making it expensive to experiment and find the optimal fit. This matters even more for businesses that are bringing generative AI into their workflows to improve productivity and efficiency.
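To see how quickly pay-per-use fees add up, here is a minimal sketch of a monthly cost estimate. The model names, per-token prices, and traffic numbers are placeholders chosen for illustration, not real vendor rates, so swap in the figures from your provider's pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical pay-per-use pricing, in US dollars per 1,000 tokens."""
    input_per_1k: float
    output_per_1k: float


def monthly_cost(pricing, requests_per_day, input_tokens, output_tokens, days=30):
    """Estimate monthly spend from average tokens per request and daily volume."""
    per_request = (
        (input_tokens / 1000) * pricing.input_per_1k
        + (output_tokens / 1000) * pricing.output_per_1k
    )
    return per_request * requests_per_day * days


# Placeholder prices for two imaginary models (assumptions, not vendor rates).
PRICING = {
    "model_a": Pricing(input_per_1k=0.0005, output_per_1k=0.0015),
    "model_b": Pricing(input_per_1k=0.01, output_per_1k=0.03),
}

for name, pricing in PRICING.items():
    cost = monthly_cost(pricing, requests_per_day=2000, input_tokens=800, output_tokens=400)
    print(f"{name}: ${cost:,.2f} per month")
```

Running the same workload through each candidate model before you commit makes the price gap visible early, while experimentation is still cheap.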

Bias and Hallucination with LLMs

The pain doesn’t end with cost. LLMs are also prone to hallucinations: factually incorrect or nonsensical outputs. In their effort to answer a query, these models sometimes invent facts. This happens because of:

- Real-world bias: LLMs are trained on massive amounts of text data scraped from the internet. This data can be messy and include factual errors, stereotypes, and misleading information. These biases can creep into the model's responses.

- Incomplete information: No dataset is perfect, and there will always be gaps in the information an LLM is trained on. When prompted on a topic with limited data, the model might make up information to fill the gaps, leading to hallucinations.

- Statistical nature: LLMs learn by analyzing the probabilities of words appearing together. This doesn't necessarily mean they understand the true meaning behind the words. So, they can sometimes generate seemingly coherent text that lacks factual grounding.

- Context issues: LLMs are good at understanding short-term context, but reasoning about complex situations or long sequences of events can be challenging. This limited context can lead them to make incorrect inferences and to hallucinate.
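The "statistical nature" and "incomplete information" points can be illustrated with a toy example. The sketch below is a simple word-pair (bigram) counter, not how a real LLM is built, and the company and person names are made up. It shows how a purely statistical model answers a question it has no data for by picking the most common continuation.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word follows another in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up training data: nothing here says who runs "umbrella".
corpus = (
    "the ceo of acme is alice . "
    "the ceo of globex is bob . "
    "the ceo of initech is alice ."
)
counts = train_bigrams(corpus)

# Asked to complete "the ceo of umbrella is ...", the model only looks at "is"
# and returns the statistically likely name, with no grounding in fact.
print(most_likely_next(counts, "is"))
```

Real LLMs are far more capable than this counter, but the failure mode is similar in spirit: a fluent, plausible answer that no source actually supports.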

Data Privacy Issues with LLMs

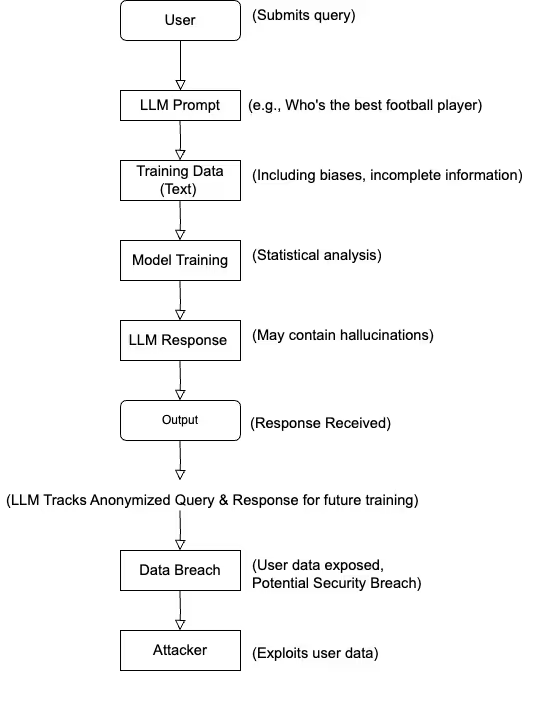

Another concern that everyone is aware of but few are willing to talk about is data privacy and security. Breaches can expose sensitive information and compromise user trust. Many LLMs track user queries and responses to improve their performance. This is a double-edged sword: the data helps model development, but collecting it raises privacy concerns.

If this data isn't anonymized properly or falls into the wrong hands, it could be used to:

- Identify users: Personal queries or those revealing identifying information could be used to track individuals if not anonymized.

- Exploit personal information: Scammers can exploit user data to launch phishing attacks, spam campaigns, or even create deepfakes.

- Train other models for malicious purposes: User data could be used to train other LLMs that spread misinformation or power targeted disinformation campaigns.
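One way to limit this exposure is to strip obvious identifiers from a prompt before it leaves your systems. The sketch below is a minimal illustration using two simple regular expressions. The patterns and placeholder labels are assumptions for this example, and they will miss many kinds of personal data (names, addresses, account numbers), so treat it as a starting point rather than a complete safeguard.

```python
import re

# Deliberately simple patterns (assumptions for illustration only).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


prompt = (
    "Draft a follow-up to jane.doe@example.com, "
    "phone +1 (555) 010-9999, about the renewal."
)
print(redact(prompt))
```

The model still receives enough context to draft the message, but the contact details stay on your side.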

How to Tackle Data Privacy Issues and Bias?

Data privacy is a top priority. However, organizations also need ways to ensure the security and integrity of how data is used internally. Often, they struggle to track employee LLM usage and lack control mechanisms to prevent misuse or optimize resource allocation.

Here’s how to ensure that your LLMs behave the way you want:

- Query Formulation: Craft clear and concise prompts for your desired LLM task. This might involve specifying the type of content you need (e.g., poem, code, factual summary), the desired length, and any relevant context.

- Quality Control: Different public LLMs have their strengths. Experiment and figure out which model works best for specific tasks. Factors like factual accuracy, creative writing ability, or code generation expertise might influence this selection.

- Multi-LLM Orchestration: Even after identifying which LLM works best for a task, don’t rely on a single model. Combine outputs from multiple LLMs to deliver the best possible results. For instance, you can combine the factual accuracy of one LLM with the creative touch of another to get the best of both worlds.
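The query formulation and orchestration steps above can be sketched in a few lines. This is an illustration under stated assumptions: `factual_model` and `creative_model` are hypothetical stand-ins for whichever model clients you use, and chaining a draft into a polish step is only one of several ways to combine outputs.

```python
from typing import Callable

ModelFn = Callable[[str], str]  # any function that maps a prompt to a response


def build_prompt(task: str, content_type: str, max_words: int, context: str = "") -> str:
    """Assemble a prompt that states the task, content type, length, and context."""
    lines = [
        f"Task: {task}",
        f"Content type: {content_type}",
        f"Maximum length: {max_words} words",
    ]
    if context:
        lines.append(f"Context: {context}")
    return "\n".join(lines)


def factual_model(prompt: str) -> str:
    """Hypothetical stand-in for a model you found to be strong on facts."""
    return f"[factual draft]\n{prompt}"


def creative_model(prompt: str) -> str:
    """Hypothetical stand-in for a model you found to be strong on style."""
    return f"[polished copy]\n{prompt}"


def orchestrate(prompt: str, drafter: ModelFn, polisher: ModelFn) -> str:
    """Draft with one model, then ask a second model to polish the draft."""
    draft = drafter(prompt)
    polish_prompt = (
        "Rewrite the draft below in an engaging tone. Do not add new facts.\n\n"
        f"Draft:\n{draft}"
    )
    return polisher(polish_prompt)


prompt = build_prompt(
    task="Summarize our Q3 product updates for prospects",
    content_type="factual summary",
    max_words=150,
    context="Audience: B2B marketing leaders",
)
print(orchestrate(prompt, drafter=factual_model, polisher=creative_model))
```

Because each model is just a function, you can swap in different providers per task and compare results without changing the rest of the workflow.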

Generative AI is still an emerging technology, and it will keep maturing. The key to getting the most out of it is to keep experimenting and evaluating. Figure out what’s working for you and what's not. Don’t overshare with these models, but provide enough context to get your desired output.